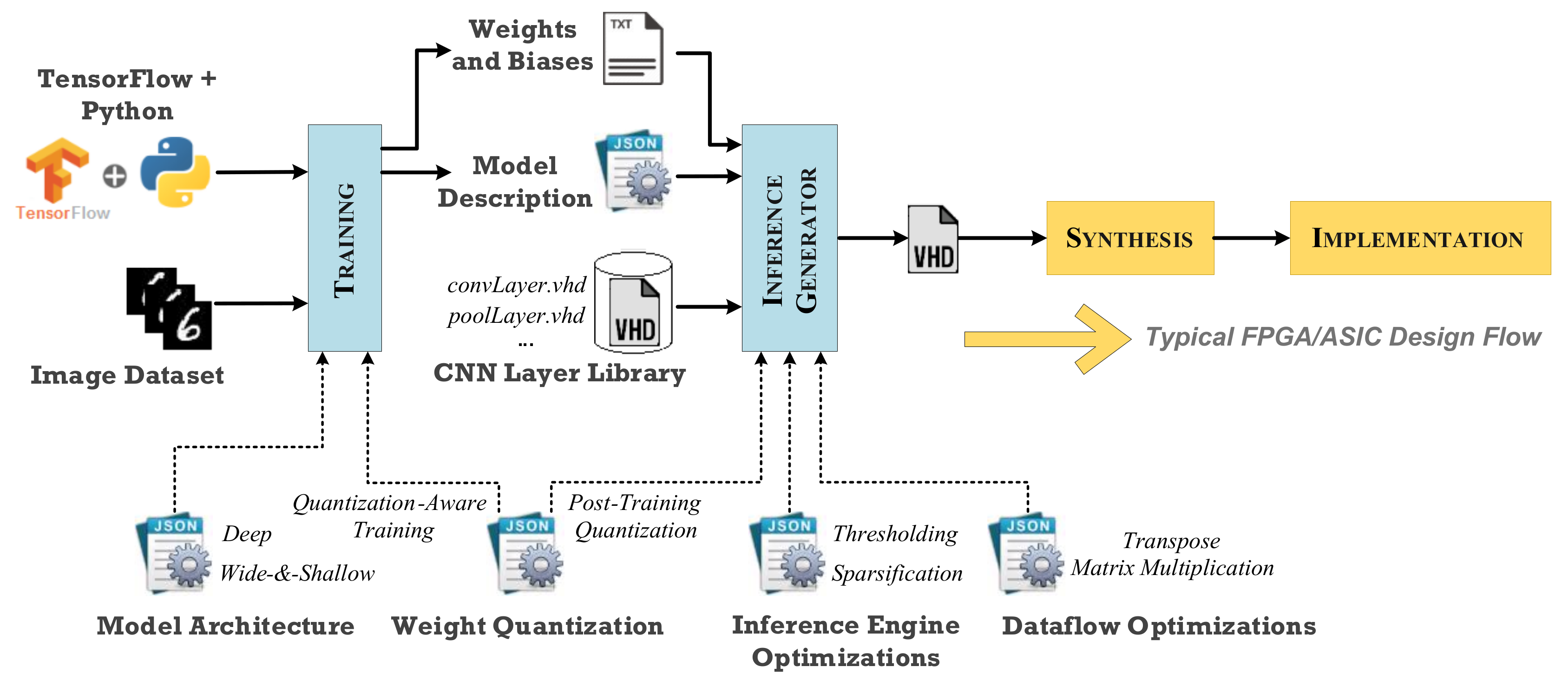

Technologies | A TensorFlow Extension Framework for Optimized Generation of Hardware CNN Inference Engines

Inference time in ms for network models with standard (S) and grouped...

Speeding Up Deep Learning Inference Using TensorFlow, ONNX, and NVIDIA TensorRT | NVIDIA Technical Blog

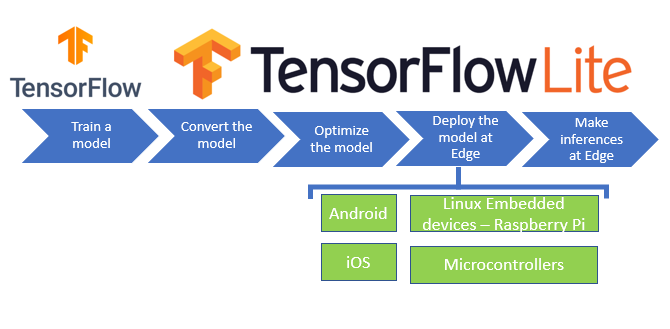

Everything about TensorFlow Lite and start deploying your machine learning model - Latest Open Tech From Seeed

GitHub - dailystudio/tflite-run-inference-with-metadata: This repository illustrates three approaches to using TensorFlow Lite models with metadata on Android.

![[PDF] TensorFlow Lite Micro: Embedded Machine Learning on TinyML Systems | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/9fd1684e6d163c89bd2b2887ab1b21b89ad10137/5-Figure2-1.png)

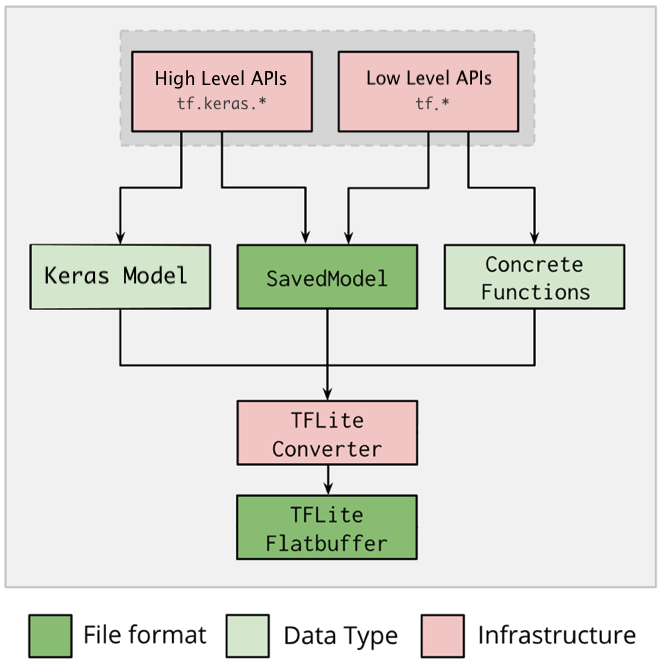

![Converting TensorFlow model to TensorFlow Lite - TensorFlow Machine Learning Projects [Book]](https://www.oreilly.com/api/v2/epubs/9781789132212/files/assets/1f57c51c-ecf6-471d-ab19-fa155db6ffd1.png)